Design reuse without verification reuse is useless

Abstract

For years, design productivity has been assisted by increasing levels of reuse. Many system-on-chip (SoC) designs now contain tens or even hundreds of reused intellectual property (IP) blocks that can constitute in excess of 90% of the gates in a chip. In addition, the size of IP blocks has risen from small peripherals to entire subsystems.

What has happened to total productivity over this same period? Productivity is being constrained by verification, but verification has not seen its reuse needs met by models and tools available on the market. As a result, verification continues to take a greater percentage of total time and budget, constraining product innovation that would otherwise be possible.

While some verification IP (VIP) is available, there is not enough and it does not provide the levels of reuse necessary. As SoC design moves to platform IP, where is the corresponding platform VIP? Where are the fully defined verification environments for platform IP that can be extended to add additional functions without having to understand the parts of the platform that are not being modified? Why is verification reuse so far behind design reuse?

These issues are explored in this article along with a way in which full IP-to-SoC verification reuse can be enabled through the use of scenario models.

Introduction

Over the past decade, designer productivity has been boosted by reuse. Systems are composed of intellectual property (IP) blocks, each having a well-defined function, and the implementation for these blocks can be used in multiple designs and shared between multiple companies.

This is the IP methodology used to fill a significant portion of the real estate in a chip. IP reuse has allowed companies to concentrate on the key parts of their design: the parts that add value and make products competitive. Over time, the size of IP blocks has grown and has extended to what is often called platform-based design, in which a processor, interconnect and a significant number of peripherals, accelerators and other functions are pre-integrated and pre-verified.

When IP is acquired from a third party, it is expected that a verification environment will be shipped with it. This environment was used to verify the block or platform in isolation. It enables users of that IP to ensure that the block has been integrated into their environment correctly and to see the extent of the verification that was performed. The environment may also come with some verification components that can be reused for system-level integration and verification, such as coverage models. The standard Universal Verification Methodology (UVM) [1] enables the reuse of other verification components through sequences and virtual sequences.

While the numbers are different for every design house, verification consumes a significant amount of the total resources and, in many cases, more than half of the time, effort and cost. Therefore, verification productivity improvement should be as good as, if not better than, design productivity improvements; otherwise the total benefits will not be as significant as expected.

However, this is not the case. The situation was put in stark contrast at a keynote [2] given by Mike Muller of ARM at the 2012 Design Automation Conference (DAC). He said that while design productivity has roughly scaled with Moore’s Law, verification productivity has gone down by a factor of 3,000,000 as measured by the amount of time it takes to build and run tests. This is not a good state of affairs.

Finally, the objective of system-level verification is not to verify the implementation of IP blocks. It is to verify that the system is capable of supporting the high-level requirements and delivering the necessary functionality and performance. This requires a different type of verification compared to verification conducted at the block level. The best way to capture these requirements is by scenario models that define expected end-to-end behavior of a system.

Verification IP

Verification IP (VIP) reuse is an important issue for EDA vendors and users alike. Together they have defined a series of methodologies, most recently the UVM standard, to ensure VIP compatibility and extensibility and to attempt to maximize the effectiveness of constrained-random test generation.

Ideally, VIP should be reusable from the IP block level all the way to the full-SoC or system level, providing plug-and-play so that multiple VIP models work together. VIP must also provide coverage metrics so that the verification team has a way to measure verification closure. This coverage must be integrated into the IP or SoC verification plan.

The primary form of commercial VIP is protocol VIP, specifically designed to ensure that a design is adhering to a defined interface or bus protocol. This type of VIP is generally available for all standard bus protocols as well as for other communications protocols such as USB and SATA. These provide protocol checking and usually have coverage models and a set of sequences defined for them. They can be used by a constrained-random generator to verify an IP block standalone and, at least in theory, synchronize and coordinate activities on the periphery of the SoC.

The UVM Approach

The adoption of SystemVerilog and methodologies such as the UVM has enabled human time and effort to be replaced by compute power for block-level verification. As block size has grown, these systems have adapted to overcome the inherent limitations of the constrained-random generation process. However, a fundamental limitation still exists because the process becomes less efficient as the sequential depth of the system increases.
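A rough way to see why sequential depth hurts is sketched below. It assumes, purely for illustration, that a target behavior is reached only by one specific ordered sequence of `depth` transactions and that the generator picks each transaction uniformly and independently from `choices` alternatives. Both the model and the function name are hypothetical simplifications, not part of the UVM; real constrained-random generators bias their choices, which is exactly what sequences are for.

```python
def expected_attempts(choices: int, depth: int) -> int:
    """Expected number of random sequences generated before one specific
    ordered sequence of `depth` steps appears.

    Assumption: every step is drawn uniformly and independently from
    `choices` alternatives, so a single attempt succeeds with probability
    choices ** -depth and the expected attempt count is its reciprocal.
    """
    if choices < 1 or depth < 0:
        raise ValueError("choices must be >= 1 and depth must be >= 0")
    return choices ** depth


if __name__ == "__main__":
    # Illustrative numbers only: 4 alternatives per step.
    for depth in (1, 4, 8, 16):
        print(depth, expected_attempts(4, depth))
```

Under these assumptions the cost grows exponentially with depth, which is why deep system-level behaviors are rarely reached by unguided randomization.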

Figure 1 shows a typical UVM verification component (UVC), a form of VIP defined by the UVM standard. Constrained-random test generation limitations are partially overcome by the creation of sequences that allow snippets of functionality to be defined while still allowing some degree of randomization. As shown in Figure 2, when several blocks are integrated together, virtual sequences are used to create higher-level sequences. These virtual sequences call on capabilities of lower-level sequences or virtual sequences.

At each level of integration, a new set of virtual sequences has to be created that combine lower-level sequences. If a new IP block is added at the same level of the hierarchy, the virtual sequencer may have to be modified or rewritten. This bottom-up methodology is problematic because it does not necessarily drive the system verification task in the same direction as required by the desired system-level functionality. Many SoC teams have had difficulty using the UVM at the system level and getting the levels of reuse that they desire in the verification IP hierarchy.

The problems start when larger blocks of IP and platforms are considered because many of them contain processors. Constrained-random test generation was designed for block-level verification and not system-level verification. As soon as the system contains one or more processors, the verification team has a choice to make:

- Remove the embedded processors and drive transactions onto the bus

- Run the production software on the embedded processors

- Write custom software that will exercise the system

All have problems that will be discussed briefly in the following sections.

Removing the Processors

Constrained-random test generation is a transaction-oriented verification solution. With the processor removed, the testbench drives onto the bus the transactions that the processor would otherwise have issued. This approach allows the maximum flexibility in terms of what can be sent into the rest of the hardware.

The problem is that this traffic is not realistic unless scenarios are created in the verification code to perform functions representative of the system’s operation. This involves knowing how to set up the peripherals to perform certain functions and generating the correct sequence of events on the bus to make data move through the system in a useful manner.

The UVM breaks down when a processor is added to the system, as shown in Figure 3. VIPs are not available for processors and there’s no way to write sequences that mimic the flow of data through a processor. Thus, with a UVM verification strategy, the usual option is to remove the processor and drive it with a bus functional model (BFM) that enables transactions to be driven onto the bus. Again, this approach may not produce realistic system-level traffic.

Running Production Software

Few designers would object to running production software on the system to see if it behaves as expected. This is the heart of hardware/software co-verification and many companies include this step as part of the sign off process.

However, it is unlikely that software will be available in the right timeframe. Prior to tapeout, a Catch-22 [3] situation is created: the hardware design is not yet stable, so results from running software on it may be unreliable, but the software cannot be debugged and stabilized without executing it on stable hardware. This makes for a difficult way to perform system-level verification and debug.

In addition, relying only on the execution of production software provides inadequate testing. Production software tends to be well-behaved and not stress corner cases in the design. Further, if the software were to change and exercise different flows through the hardware, bugs lurking in the design could restrict the versions of software that could be run. Finally, production software usually runs slowly in simulation. Booting a real-time operating system (RTOS) on an embedded processor is feasible on a hardware accelerator, but this option is not available to all SoC verification teams.

Writing Custom Software

Of course, it is possible to handwrite custom software to run on the processors, and this could be done at two different levels. First, directed tests could be written that move data through the system in a specific manner. This may use some of the production software, such as drivers, to create a hardware abstraction layer so that the test developer does not need to know details, including peripheral configuration. Directed tests suffer from many of the same problems as running production software, in addition to the time it takes to write the tests.

Alternatively, software could be written that is more specific for verification, analogous to the process of writing code for built-in self-test (BIST). While multiple tests could be written, this tends to be customized code that may not be usable when the platform is modified or extended. It gets successively more difficult as additional processors are added into the system, especially if they are heterogeneous in nature. Moreover, humans have trouble visualizing parallel activity within the SoC, making it difficult to handwrite multi-threaded tests for multiple embedded processors.

A Solution for Platform VIP

When using platform IP, or when an integrated subsystem or system contains one or more processors, it is important to ascertain the verification process objective. It is no longer to verify the implementation of blocks, but rather to verify that data can move through the system correctly with the expected latency and bandwidth. This requirement is not met by the UVM for systems that contain processors because there is no suitable VIP that exists at the block level to support the platform.

It is important that additional IP can easily be added to these platforms and the same demand should be placed on the VIP that goes along with it. This requirement is not well met by the UVM either since it requires rewriting virtual sequences. It would be ideal if the same model could be used as the verification plan and coverage model rather than having to write additional models for each. The current UVM methodology is cumbersome on both counts.

A solution exists that matches all VIP requirements and describes the flow of data through IP blocks based on possible outcomes. All possible outcomes for a block are described along with the manner in which that outcome can be created. When multiple blocks are connected together, more complex flows are created with one block establishing demands and other blocks providing the necessary data or conditions. Those blocks in turn establish demands. Thus, a cascade of demands and ways to meet those demands are created by the VIP for each IP block.

When a verification target (one of the outcomes) is specified, a solver finds a possible path through all of the blocks to achieve that outcome. A generator automatically writes the necessary C code to run on the embedded processors. The combined VIP is the set of all possible behaviors and becomes the coverage model as well as the source of both stimulus and result checking. The selection of outcomes becomes part of the verification plan. This form of VIP is a scenario model that can be built incrementally and, as additional scenarios are incorporated, more extensive tests can be generated, or more ways to achieve desired outcomes explored.
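The idea of outcomes, demands and a solver can be illustrated with a small sketch. This is not how any commercial tool is implemented; it is a minimal depth-first search over a hand-written model, and every outcome name, action name and function (`Way`, `MODEL`, `solve`, `emit_c`) is a hypothetical stand-in. The emitted text is only C-shaped output for illustration, since the driver functions it calls are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Way:
    """One way to create an outcome (all names here are hypothetical)."""
    action: str          # step to run, e.g. a call into a driver
    demands: tuple = ()  # outcomes that must be created first


# Hypothetical scenario model: outcome -> alternative ways to create it.
MODEL = {
    "image_on_sd_card": [Way("sd_write()", ("image_in_memory",))],
    "image_in_memory": [
        Way("camera_capture()"),
        Way("jpeg_decode()", ("jpeg_in_memory",)),
    ],
    "jpeg_in_memory": [Way("sd_read()")],
}


def solve(model, target, _active=frozenset()):
    """Return an ordered list of actions that creates `target`, or None.

    Depth-first search: take the first way whose demands can all be met,
    placing the actions that satisfy demands before the action that
    depends on them.
    """
    if target in _active:  # demand cycle, so this path is unusable
        return None
    for way in model.get(target, []):
        steps = []
        for demand in way.demands:
            sub = solve(model, demand, _active | {target})
            if sub is None:
                break
            steps.extend(sub)
        else:
            return steps + [way.action]
    return None


def emit_c(steps):
    """Format the solved steps as the text of a C-style test function."""
    body = "\n".join(f"    {step};" for step in steps)
    return "void test(void) {\n" + body + "\n}"


if __name__ == "__main__":
    print(emit_c(solve(MODEL, "image_on_sd_card")))
```

A real solver would choose among the alternative ways at random or by coverage goals rather than always taking the first, and would also schedule steps across multiple processors; the sketch only shows how a requested outcome cascades into demands on other blocks.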

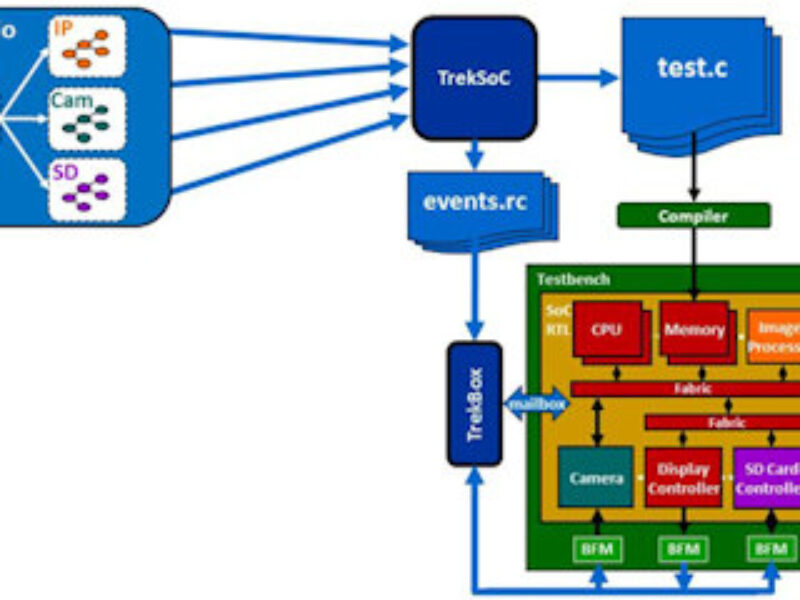

Figure 4 shows a commercial example of this approach, the TrekSoC EDA product [4] from Breker Verification Systems. TrekSoC reads in a hierarchy of graph-based scenario models that define all the possible outcomes for the SoC as well as the ways to achieve these outcomes. It then solves for paths through the SoC and automatically generates multi-threaded, self-verifying C test cases to run on multiple embedded processors. Since some of these test cases will send or receive data on the chip’s I/O ports, TrekSoC also coordinates the embedded processors with testbench BFMs, including standard UVCs.

Unlike UVM virtual sequences, scenario models are directly reusable at higher levels of hierarchy. In Figure 4, scenario models for the image processor, camera and SD card controller blocks are instantiated with no change in the SoC scenario model. The result is a platform-based verification solution in which platform VIP is as reusable as platform IP.

If VIP for a platform is provided via a scenario model, the addition of a new block creates new ways in which demands can be met or outcomes created. The integration of this new VIP does not require rewriting or extension of existing VIPs. The verification plan and coverage model are also cumulative. Finally, scenario model VIP is implementation independent, working equally well with a virtual platform or a register transfer level (RTL) implementation of the IP blocks and SoC.
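The cumulative property can be sketched as follows, assuming each block ships its scenario model as a simple mapping from outcomes to alternative `(action, demands)` pairs. The block names (`CAMERA`, `SD_CARD`, `USB`), the action strings and the helper functions are all hypothetical; the point is only that merging in a new block adds paths without editing the existing models.

```python
from itertools import product

# Hypothetical per-block models: outcome -> list of (action, demands).
CAMERA = {"image_in_memory": [("camera_capture()", ())]}
SD_CARD = {"image_on_sd_card": [("sd_write()", ("image_in_memory",))]}
USB = {"image_in_memory": [("usb_receive()", ())]}  # the newly added block


def merge(*block_models):
    """Combine block models into one; the inputs are left unmodified and
    new alternatives are appended to whatever already exists."""
    combined = {}
    for block in block_models:
        for outcome, ways in block.items():
            combined.setdefault(outcome, []).extend(ways)
    return combined


def all_paths(model, target, active=frozenset()):
    """Enumerate every action sequence that creates `target`.

    The full set of paths is what the article calls the coverage model.
    """
    if target in active:  # ignore cyclic demands
        return []
    paths = []
    for action, demands in model.get(target, []):
        options = [all_paths(model, d, active | {target}) for d in demands]
        # One path per combination of ways to satisfy each demand.
        for combo in product(*options):
            paths.append([a for part in combo for a in part] + [action])
    return paths


platform = merge(CAMERA, SD_CARD)
extended = merge(CAMERA, SD_CARD, USB)

if __name__ == "__main__":
    print(all_paths(platform, "image_on_sd_card"))
    print(all_paths(extended, "image_on_sd_card"))
```

In this sketch the platform model yields one path to `image_on_sd_card` and the extended model yields two, while `CAMERA` and `SD_CARD` are untouched by the addition of `USB`.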

Conclusions

In order to continue increasing the productivity associated with the design and verification of complex systems, reuse has to be made scalable and must have a similar impact on both design and verification. Additional functionality is being instantiated at the system level and constrained-random generation is failing to provide capabilities required to verify this functionality.

While platform design IP has increased the efficiency of the design process, VIP has failed to deliver similar gains. A new method of describing VIP, scenario models, is suitable for platform IP. It is reusable and extensible, with built-in notions of coverage and verification planning.

Without a migration to scenario models, the SoC development process will become even more constrained by the verification task.

References

[1] Universal Verification Methodology (UVM) 1.1 User’s Guide, Accellera, 2011.

[2] “ARM CTO asks: Are we getting value from verification?” by Brian Bailey, EDN, June 13, 2012. https://www.edn.com/design/integrated-circuit-design/4375333/ARM-CTO-asks–Are-we-getting-value-from-verification-

[3] Catch-22 by Joseph Heller, Simon & Schuster, 1961.

[4] “Verifying SoCs from the Inside Out” by Thomas L. Anderson, Chip Design, August 3, 2012. https://chipdesignmag.com/display.php?articleId=5153

About the author:

Adnan Hamid is co-founder and CEO of Breker Verification Systems. Prior to starting Breker in 2003, he worked at AMD as department manager of the System Logic Division. Previously, he served as a member of the consulting staff at AMD and Cadence Design Systems. Hamid graduated from Princeton University with Bachelor of Science degrees in Electrical Engineering and Computer Science and holds an MBA from the McCombs School of Business at The University of Texas.
