Addressing the challenges of autonomous driving

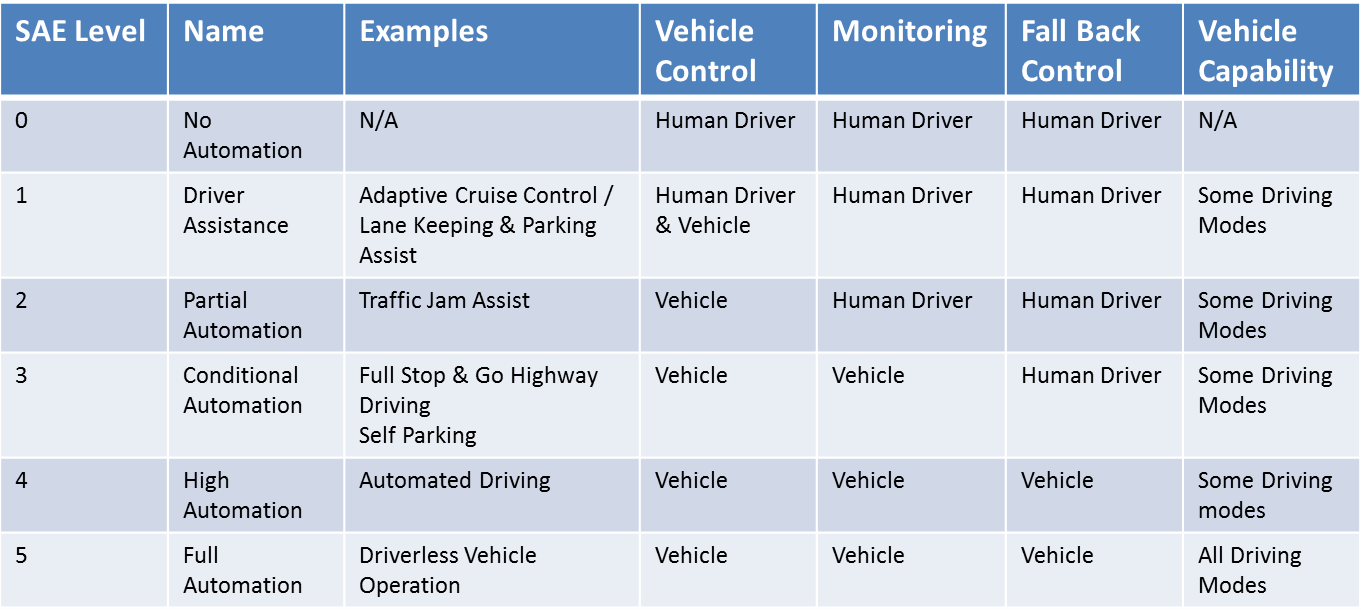

Autonomous driving is a complex area that spans a range of capabilities, from fully autonomous operation to control shared with the driver. To help classify these capabilities, the Society of Automotive Engineers (SAE) has defined several levels of autonomy:

SAE autonomous driving level definition.

As the level of autonomy increases, so too does the need for the vehicle to comprehend its environment and act safely within it. Enabling the vehicle to understand and safely interact with its environment requires a number of sensor modalities, from ultrasonic, GPS, RADAR and cameras to LIDAR, along with the associated processing capabilities.

Each of these modalities provides data on part of the vehicle's environment; forming a complete picture requires the vehicle to fuse these elements together. The sensor deployments and modalities vary depending on the level of autonomy being implemented. Typically, cameras are used for applications such as lane keeping assistance, blind spot detection and traffic sign recognition, while RADAR implemented as Frequency Modulated Continuous Wave (FMCW) may be used to determine the distances to objects. For levels two and above, the ability to comprehensively understand the vehicle's environment is crucial, because it allows the vehicle to identify its location and the surrounding obstacles and so navigate safely. This understanding is obtained using camera, RADAR and LIDAR data along with global positioning system (GPS) data. GPS data cannot be relied upon on its own, as its accuracy varies and the signal is easily blocked by buildings and infrastructure.
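As an illustration of how an FMCW RADAR turns a measurement into a distance, the sketch below uses the standard relationship for a linear chirp: a target at range R delays the echo by 2R/c, and that delay appears as a beat frequency equal to the chirp slope multiplied by the delay. The function names and the parameter values in the usage note are assumptions chosen for illustration; they are not taken from any particular sensor, and Doppler shift is ignored.

```python
C = 299_792_458.0  # speed of light in m/s


def fmcw_range(beat_hz, bandwidth_hz, chirp_s):
    """Range in metres for a linear (sawtooth) FMCW chirp, ignoring Doppler."""
    slope = bandwidth_hz / chirp_s       # chirp slope in Hz per second
    round_trip_s = beat_hz / slope       # echo delay that produces this beat frequency
    return C * round_trip_s / 2.0        # halve it: the signal travels out and back


def range_resolution(bandwidth_hz):
    """Smallest separation in metres at which two targets can be told apart."""
    return C / (2.0 * bandwidth_hz)
```

For example, with an assumed 1 GHz sweep over 50 µs, a 1 MHz beat frequency corresponds to a target roughly 7.5 m away, and the range resolution is about 15 cm.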

Understanding the environment and acting on it are the key enablers of autonomous capability. Both lives and the environment are at risk as the result of an unintended operation or action at all six levels. As such, autonomous capability must be developed within a framework which addresses the safety of the design and all its elements. Developing according to ISO 26262 is an absolute must for autonomous vehicles. This standard provides a functional safety framework and defines several Automotive Safety Integrity Levels (ASIL) along with their allowable failure rates. Autonomous driving solutions will also be subject to a range of harsh environments once deployed in markets around the world. To ensure systems operate across these environments, automotive grade components require extensive qualification and certification to and beyond AEC-Q100.

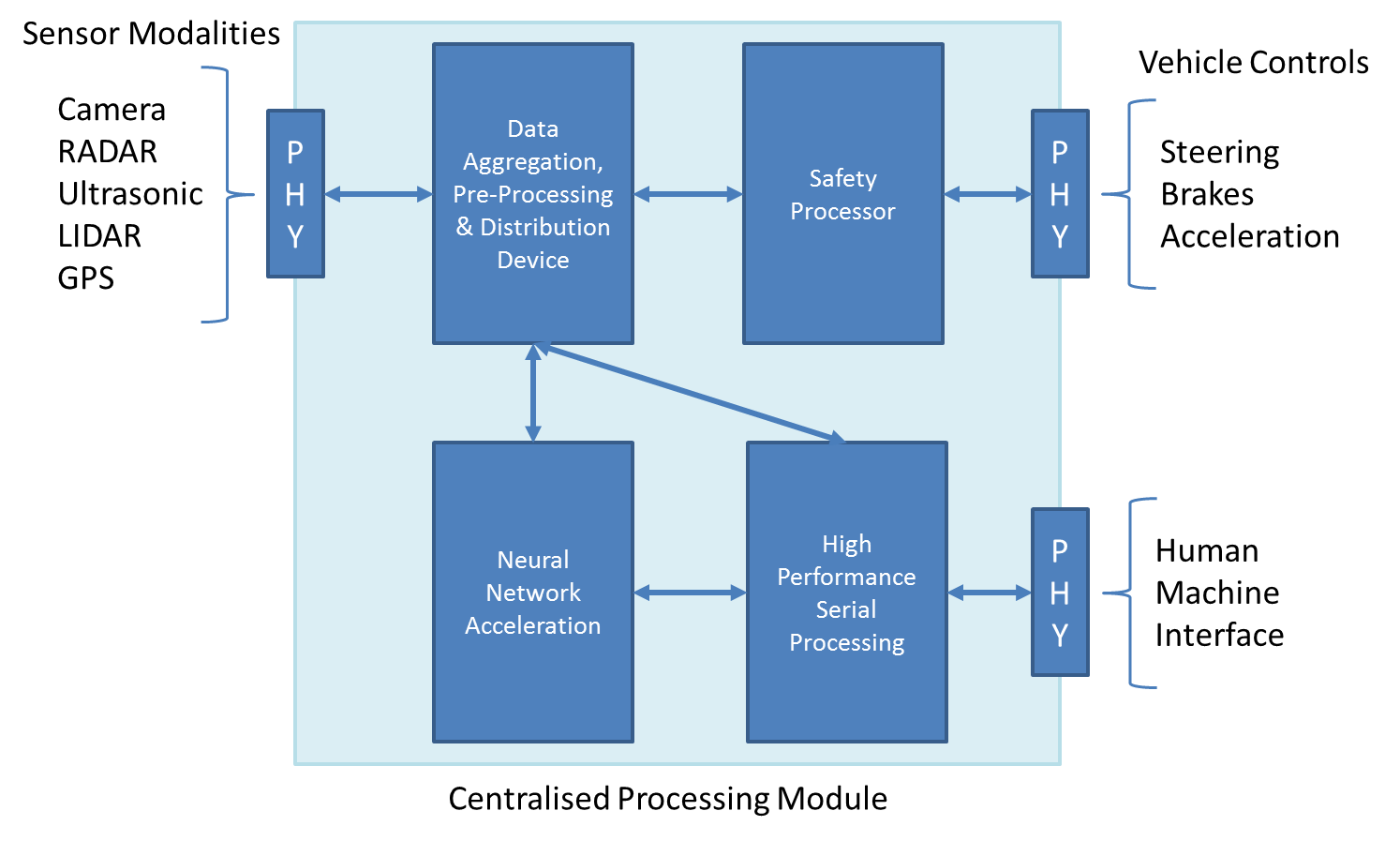

Architecture

At the heart of implementing an autonomous capability is the centralised processing module. To successfully implement autonomous capability, the centralised processing module must contain the following functions:

- Data aggregation, pre-processing and distribution (DAPD) – This interfaces with the different sensor modalities performing basic processing, routing and switching of information between processing units and accelerators within the processing unit.

- High performance serial processing – High performance processing element which performs data extraction, sensor fusion and high level decision-making based upon its inputs. In some applications, neural networks will be implemented within the high performance serial processing.

- Safety processing – Performing real time processing and vehicle control based upon the detected environment provided by pre-processing in the DAPD device and results from the neural network acceleration and high performance serial processing elements.
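The division of work between the three functions listed above can be sketched as a simple data flow. This is a toy model only: the class, the function names, the "smallest distance wins" fusion rule and the 10 m braking threshold are all hypothetical and stand in for far more complex real processing.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    sensor: str        # e.g. "camera", "radar", "lidar"
    distance_m: float  # distance to the nearest obstacle seen by this sensor


def dapd(raw_frames):
    """Aggregation and pre-processing: discard missing or invalid readings."""
    return [Detection(sensor, float(dist))
            for sensor, dist in raw_frames
            if dist is not None and dist >= 0]


def high_performance_processing(detections):
    """Naive fusion: keep the most conservative (smallest) distance, if any."""
    if not detections:
        return None
    return min(d.distance_m for d in detections)


def safety_processing(fused_distance_m, brake_below_m=10.0):
    """Vehicle control decision; with no usable data, fail safe and brake."""
    if fused_distance_m is None or fused_distance_m < brake_below_m:
        return "BRAKE"
    return "CRUISE"
```

Passing `[("camera", 25.0), ("radar", 8.5), ("lidar", None)]` through the three stages in order yields a brake command, because the RADAR reading is below the assumed threshold and the missing LIDAR reading is dropped during pre-processing.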

The creation of a centralised processing module presents the designer with several interfacing, scalability, compliance and performance challenges, along with the traditional Size, Weight and Power – Cost (SWaP-C) challenges of deploying in a power and thermally constrained environment. These SWaP-C challenges are particularly apparent when addressing the DAPD and safety processors.

Addressing the Challenges

One solution to these challenges is to use a single device which can provide not only the interfacing, pre-processing and routing capabilities of the DAPD but is also capable of including safety processing and potentially neural network acceleration within the same silicon. This highly integrated approach enables a tightly integrated solution benefiting the SWaP-C significantly.

Such a highly integrated solution is possible using the Xilinx Automotive Grade Zynq UltraScale+ MPSoC heterogeneous system on chip. This range of devices provides programmable logic coupled with four high performance Arm Cortex-A53 cores, forming a tightly integrated processing unit. For real-time control, the Zynq UltraScale+ MPSoC also provides a real-time processing unit (RPU) which contains dual Arm Cortex-R5 processors that can run in lockstep, is capable of implementing safety features up to ASIL C and is intended for safety-critical applications. To provide the necessary functional safety, the RPU has been designed with the ability to reduce, detect and mitigate single random failures, whether caused by hardware faults or by single event effects. These devices enable efficient segmentation of the functionality between the processor system resources and the programmable logic.

One key challenge presented by the DAPD is the need to interface with a range of different sensor modalities, all of which come with differing interface standards. A typical solution will use high speed interfaces such as MIPI, JESD204B, LVDS and GigE for high bandwidth sensors such as cameras, RADAR and LIDAR. The DAPD will also be required to support slower interfaces such as CAN, SPI, I2C and UARTs. The processing system (PS) of the Zynq UltraScale+ MPSoC provides support for a range of industry-standard interfaces including SPI, I2C, UART and GigE, while the flexibility of the programmable logic (PL) IO enables direct interfacing with MIPI, LVDS and gigabit serial links, allowing the higher levels of the protocol to be implemented within the PL, often using IP cores. Implementing the protocol within the PL also allows standards revisions to be incorporated easily and provides flexibility in the number of specific sensor interfaces supported within a solution. Given the correct PHY in the hardware design, the PL can implement any interface, providing a true any-to-any interfacing capability.

The programmable logic provided by the Xilinx automotive grade Zynq UltraScale+ MPSoC also enables acceleration of neural networks. The parallel nature of the programmable logic allows the implementation of neural networks which are more responsive and deterministic than a traditional CPU/GPU-based approach, as the traditional external memory bottlenecks between stages are removed. These neural networks can be implemented with high level languages such as C, C++ and OpenCL using the system-optimising compiler SDSoC, which enables functionality to be moved seamlessly from the processor system to the programmable logic.

Another challenge is implementing the safety processors. These must act upon commands received from the DAPD and high performance serial processing, which enable the safe navigation of the vehicle within its environment. The safety processors are required to interact directly with vehicle controls such as steering, acceleration and braking. This is a critical aspect of autonomous driving, as errors here can result in loss of life or damage to the environment. The Xilinx automotive grade Zynq UltraScale+ MPSoC contains dual lockstep Arm Cortex-R5 cores within a Real-time Processing Unit (RPU) which can be used to implement the safety processing.
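The idea behind lockstep operation is that two cores execute the same work and their results are compared, so that a fault in either core is detected rather than silently acted upon. The sketch below is a software analogy only: real lockstep cores are compared in hardware, and the names `run_lockstep`, `LockstepFault` and `brake_demand`, as well as the control law itself, are hypothetical.

```python
class LockstepFault(Exception):
    """Raised when the two redundant computations disagree."""


def run_lockstep(primary, checker, *args):
    """Run the same computation twice and accept the result only if both agree."""
    out_primary = primary(*args)
    out_checker = checker(*args)
    if out_primary != out_checker:
        # A mismatch means one copy is faulty; report it instead of guessing.
        raise LockstepFault(f"mismatch: {out_primary!r} != {out_checker!r}")
    return out_primary


def brake_demand(speed_mps, distance_m):
    """Hypothetical control law: deceleration (m/s^2) needed to stop in distance_m."""
    return speed_mps ** 2 / (2.0 * distance_m)
```

Calling `run_lockstep(brake_demand, brake_demand, 20.0, 40.0)` returns the agreed value, while substituting a checker that computes something different raises `LockstepFault`, which the surrounding system would treat as a reason to enter a safe state.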

Along with the lockstep capability of the RPU cores, several additional mitigation provisions have been implemented. These include Error Correction Codes (ECC) for the RPU tightly coupled memories and the caches, while the DDR memory is protected with single error correction, double error detection (SECDED) codes. The inclusion of ECC on caches and memories protects the integrity of both the application programme and the data required to implement the autonomous vehicle control. To check that the underlying hardware has no faults prior to operation, there is also provision for Built-in Self-Test (BIST) during power on. Additional BIST can be executed at the request of the user during operation as required. The architecture of the Zynq UltraScale+ MPSoC also provides the ability to implement functional isolation of memory and peripherals within the device.
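To show what single error correction with double error detection means in practice, the sketch below implements a toy extended Hamming (8,4) code: four data bits are protected by three Hamming parity bits plus one overall parity bit. This is an assumption made for illustration; memory ECC in a real device operates on much wider words, and nothing here describes the actual code used in the Zynq UltraScale+ MPSoC.

```python
def encode(nibble):
    """Encode 4 data bits as 8 bits; index 0 holds the overall parity bit."""
    code = [0] * 8
    # Data bits go in Hamming positions 3, 5, 6 and 7.
    code[3], code[5], code[6], code[7] = [(nibble >> i) & 1 for i in range(4)]
    code[1] = code[3] ^ code[5] ^ code[7]   # parity over positions 1, 3, 5, 7
    code[2] = code[3] ^ code[6] ^ code[7]   # parity over positions 2, 3, 6, 7
    code[4] = code[5] ^ code[6] ^ code[7]   # parity over positions 4, 5, 6, 7
    for pos in range(1, 8):
        code[0] ^= code[pos]                # overall parity makes the total even
    return code


def decode(code):
    """Return (nibble, status); status is 'ok', 'corrected' or 'double_error'."""
    code = list(code)
    syndrome = 0
    overall = 0
    for pos in range(8):
        if code[pos]:
            syndrome ^= pos                 # XOR of the positions of all set bits
        overall ^= code[pos]
    if overall == 1:
        code[syndrome] ^= 1                 # one flipped bit: syndrome is its position
        status = "corrected"
    elif syndrome != 0:
        return None, "double_error"         # even parity but bad syndrome: two flips
    else:
        status = "ok"
    nibble = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return nibble, status
```

Flipping any single bit of an encoded word still decodes to the original value with status `corrected`, whereas flipping any two bits is reported as `double_error` rather than being silently miscorrected.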

The inclusion of these facilities within the Xilinx automotive grade Zynq UltraScale+ MPSoC enables the safety processing to be implemented within the same silicon as the DAPD and potentially Neural Network Acceleration. To ensure industry-leading device quality, Xilinx has gathered industry requirements and merged those into an internal quality control programme called Beyond-AEC-Q100. Doubling most of the testing requirements within this framework, Xilinx ensures a healthy safety margin for its automotive devices. Of course, higher integration also reduces the complexity of the PCB design and interconnect required in the final solution while offering a lower power dissipation.

Conclusion

The provision of autonomous driving capability requires the implementation of a centralised processing module which faces SWaP-C challenges. A highly integrated solution based on using Xilinx automotive grade Zynq UltraScale+ MPSoC for the DAPD, Neural Network Accelerator and Safety Processor enables the creation of a smaller, lower weight and more power-efficient solution.

About the author:

Willard Tu is Sr Director Automotive Market at Xilinx – www.xilinx.com
