Audio rises for event detection

Drivers already hear an approaching ambulance’s siren well before they can spot the vehicle, said Paul Beckmann, founder and CEO of DSP Concepts, in a recent interview with EE Times. So why wouldn’t the automotive industry be interested in audio?

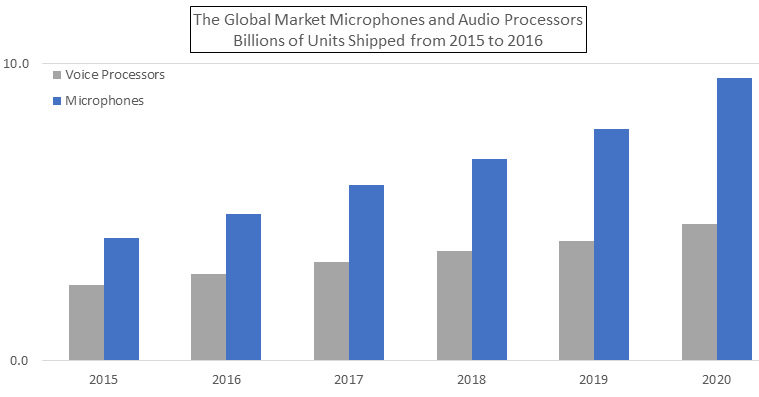

System OEMs, not just carmakers, are on the cusp of “using more microphones to generate yet another critical sensory data, audio, for artificial intelligence,” Beckmann explained.

As he envisions it, audio is “heading from pure playback” in entertainment systems to enabling “input, trigger and analytics in contextual awareness.”

The information picked up by microphones can be used by everyday systems, from cars to digital virtual assistants and portable devices. “Sight and hearing go hand in hand,” added Willard Tu, DSP Concepts’ executive vice president of sales and marketing. “Dogs barking, babies crying, glass shattering, cars honking, sirens wailing, gunshots…audio helps systems understand the environment [and the context] better.”

Two developments drive the electronics industry’s sudden exuberance for audio.

One is the proliferation of smartphones with multiple microphones per handset. The other is the popularity of digital virtual assistants like Amazon’s Echo and Google Home. Peter Cooney, principal analyst and director of SAR Insight & Consulting, observed that “the increasing integration of virtual digital assistants into common consumer devices is driving awareness and adoption of voice as a natural user interface for many everyday tasks.”

Before microphones can go beyond offering a natural user interface and become genuinely “intelligent sensors,” however, the industry still needs a few advances.

To meet the challenge, audio needs microphones that can pick up better-quality sound, processors that are good at post-processing audio, effective algorithms to pre-process audio, easier-to-use audio processing tools, an audio standard equivalent to OpenGL in graphics, and microphones that can stay always-on with minimal power drain.

In short, as Cooney noted, the market demands “always listening technologies, speech enhancement algorithms and microphones.”

ARM running audio

Audio processing used to be a specialty required by playback systems such as TVs, DVD players and equalizers in hi-fi systems.

Driven by the proliferation of microphones in smartphones and other home devices, audio processing has spread practically everywhere. Nor is a specialty audio DSP the only chip in a system that processes audio.

As more audio processing runs on ARM processors, more OEMs are “keenly looking at microphones” as input sensors for AI, DSP Concepts’ Beckmann said.

DSP Concepts is well positioned to observe this market transition.

Beckmann reported growing demand over the last 12 months for his company’s own audio tool, Audio Weaver, which he described as “the only graphical audio design framework that works cross-platform.”

Industry analysts agree that DSP Concepts holds a unique place in the audio market. Bob O’Donnell, president and chief analyst of TECHnalysis Research, LLC, told EE Times, “I don’t know of many direct competitors to DSP Concepts or their Audio Weaver tool. There are many companies who do professional audio editing and audio processing for music and recording purposes, but that’s a different animal.”

Cooney agreed. “I don’t know of any competing products to Audio Weaver.” He added, “DSP Concepts have other products too, such as sound enhancement algorithms (noise suppression, echo cancellation, beamforming), benchmarking and reference designs.”

DSP Concepts doesn’t design or sell DSPs, yet its competitors are generally DSP companies. Audio Weaver competes with audio tools developed in-house by DSP suppliers such as Texas Instruments or Cirrus Logic. The difference is that those in-house tools work only on the supplier’s own chips. By using a platform-independent tool such as Audio Weaver, “OEMs don’t have to get locked into a specific DSP,” added DSP Concepts’ Tu.

Cooney said that DSP Concepts partners with a number of other companies, such as Cadence/Tensilica, to offer audio design solutions to their customers.

In addition to Audio Weaver, DSP Concepts licenses a host of audio algorithms that shape microphone input, including beamforming, echo cancellation, noise cancellation and far-field sound. At a time when the industry suffers from a shortage of engineering talent well versed in audio processing, the market is clamoring for easy-to-use tools and audio pre-processing algorithms that can isolate sound from unwanted environmental noise, explained Beckmann.

Audio: a stepchild to video

At present, however, using audio for acoustic event detection (and analytics) remains a relatively new practice.

TECHnalysis Research’s O’Donnell told EE Times: “In theory, there could be more dedicated audio processors that do AI, but frankly, audio has always been a stepchild to video and that continues today.”

He added that another big challenge for audio is “language and meaning.” He said, “A picture of a tree is a tree in any language, but understanding words, phrases and, most importantly, meaning and intent are both language- and culture-specific.” This makes voice recognition and natural language processing very difficult, he added.

The lack of standards in audio is another obstacle, acknowledged DSP Concepts’ Beckmann.

Consider OpenGL, a cross-language, cross-platform API for rendering graphics. The API is critical to video game designers, for example, who want hardware-accelerated performance without writing code down to the metal for every GPU. GPU vendors such as Nvidia, in turn, optimize their hardware and drivers for the API.

The audio world could use a hardware abstraction layer that plays a role similar to OpenGL’s in enabling cross-platform, hardware-accelerated rendering. Without a standard, every audio chip company has to optimize its own hardware and fend for itself. The lack of standards slows the innovation needed to carry audio applications across platforms.
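The article does not say what such a layer would look like. As a minimal sketch, the idea is that application code is written once against a vendor-neutral set of operations, and each chip vendor supplies its own optimized implementation behind that interface. Every name here (`AudioBackend`, `ReferenceBackend`, `register_backend`, `smooth_and_boost`) is hypothetical and does not correspond to any existing audio standard.

```python
from abc import ABC, abstractmethod

class AudioBackend(ABC):
    """Hypothetical vendor-neutral interface; each chip vendor would implement it."""

    @abstractmethod
    def fir_filter(self, samples, taps):
        """Apply an FIR filter with the given coefficients."""

    @abstractmethod
    def gain(self, samples, factor):
        """Scale every sample by a constant factor."""

class ReferenceBackend(AudioBackend):
    """Portable pure-Python fallback, standing in for a vendor's optimized code."""

    def fir_filter(self, samples, taps):
        out = []
        for n in range(len(samples)):
            # y[n] = sum_k h[k] * x[n - k], treating samples before n = 0 as zero
            acc = 0.0
            for k, h in enumerate(taps):
                if n - k >= 0:
                    acc += h * samples[n - k]
            out.append(acc)
        return out

    def gain(self, samples, factor):
        return [factor * x for x in samples]

# Hypothetical registry: the platform picks a backend by name at run time.
_BACKENDS = {}

def register_backend(name, backend):
    _BACKENDS[name] = backend

def get_backend(name):
    return _BACKENDS[name]

def smooth_and_boost(backend, samples):
    """Application code written once, against the interface only."""
    return backend.gain(backend.fir_filter(samples, [0.5, 0.5]), 2.0)

register_backend("reference", ReferenceBackend())
print(smooth_and_boost(get_backend("reference"), [1.0, 1.0, 1.0]))  # [1.0, 2.0, 2.0]
```

A DSP vendor would register its own `AudioBackend` subclass. The application function would not change, which is the portability the OpenGL comparison points to.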

Always-on, battery life

The next frontier for popular digital virtual assistants such as Amazon Echo or Google Home is expected to be “always-listening” capability. Amazon is moving in this direction with its “tap-then-speak” voice activation, but such a device isn’t exactly “always listening.”

Once an always-on/always-listening device goes outside the home, its challenges start to multiply.

Outdoors, its audio processing must separate what the device needs to hear from background noise.

An even bigger issue is battery life, said Beckmann.

To that end, he pointed out, the “quiescent-sensing MEMS device developed by Vesper,” a Boston-based startup, “is critical.” Beckmann, who also works in the Boston area, is familiar with the work of Vesper CEO Matt Crowley. Focused on developing piezoelectric MEMS microphones, Vesper last month announced an acoustic sensor that uses sound energy to wake a system from full power-down mode.

Crowley said Vesper’s newest piezoelectric MEMS microphone, the VM1010, draws only “3 µA of current while in listening mode.”

At the time of the announcement, Crowley promised that by the time the VM1010 reaches production sampling in the fourth quarter of this year, a newer version will come with a “frequency discrimination” feature. That means system designers will be able to program the MEMS microphone to respond to a specific type of sound, such as gunshots, glass shattering or human voices.
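Vesper’s announcement, as reported here, does not describe how frequency discrimination is implemented. To illustrate the general idea only, one common way to make a detector respond to a single frequency is the Goertzel algorithm, which computes one DFT bin cheaply. The sketch assumes a 16 kHz sample rate, a 256-sample frame and a hypothetical wake rule (`should_wake`, `min_fraction`). It makes no claim about Vesper’s design. Real events such as gunshots or breaking glass are broadband and would need more than one frequency bin.

```python
import math

def goertzel_power(frame, sample_rate, target_hz):
    """Squared DFT magnitude |X_k|^2 at the bin nearest target_hz (Goertzel algorithm)."""
    n = len(frame)
    k = round(n * target_hz / sample_rate)        # nearest DFT bin
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s1 = s2 = 0.0                                 # previous two filter states
    for x in frame:
        s1, s2 = x + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2

def should_wake(frame, sample_rate, target_hz, min_fraction=0.5):
    """Hypothetical wake rule: wake only if most of the frame's energy lies
    at target_hz. Assumes the target bin k satisfies 0 < k < n/2."""
    energy = sum(x * x for x in frame)
    if energy == 0.0:
        return False                              # silence never wakes the system
    # By Parseval, a real tone's energy splits between bins k and n-k, so
    # 2*|X_k|^2 / (n * energy) is the fraction of frame energy near target_hz.
    power = goertzel_power(frame, sample_rate, target_hz)
    fraction = 2.0 * power / (len(frame) * energy)
    return fraction >= min_fraction

fs, n = 16_000, 256
tone = [math.sin(2 * math.pi * 1000 * i / fs) for i in range(n)]   # 1 kHz tone
other = [math.sin(2 * math.pi * 3000 * i / fs) for i in range(n)]  # 3 kHz tone
print(should_wake(tone, fs, 1000))   # True
print(should_wake(other, fs, 1000))  # False
```

Goertzel fits this kind of example because it costs only one multiply-add per sample per frequency of interest, far less than a full FFT when only a few frequencies matter.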

Inside cars

Back to audio applications inside cars: voice offers a natural human-machine interface for drivers.

Tier 1 suppliers and car OEMs already depend heavily on audio processing technology to improve voice quality when a driver uses a speakerphone. Beckmann said, “Cars equipped with multiple speaker channels, ranging from eight up to 32 channels, present a very complex audio system.”

That’s not all. With the advent of electric vehicles, the auto industry began to use fake engine noise, or “e-sound.” Automakers from BMW to Volkswagen are increasingly turning to a bag of sound-boosting tricks. In fact, it isn’t just EVs: today’s more fuel-efficient engines also sound far quieter and less powerful. Automakers worry that all that quiet might push buyers away.

To many in the automotive industry, audio is familiar territory. Carmakers know that audio can deliver differentiation. In the future, acoustic sensors inside a vehicle could listen not only to what’s going on outside the car but also to its own engine, detecting anomalies for diagnostics.

— Junko Yoshida, Chief International Correspondent, EE Times