Ray tracing ripe for embedded devices

Once all the pieces of the puzzle are in place, consumers will see the full picture and will want ray-traced graphics on all their devices. Imagination Technologies was the first to make ray tracing technology a reality, and our approach differed in that it was designed from the ground up for deployment in embedded hardware within a strict power envelope. In other words, Imagination is doing what it does best: making cutting-edge technology work efficiently.

In any article about ray tracing, the term ‘holy grail’ is never far behind: something long sought after that has always seemed just out of reach. However, we first talked about our ray tracing IP back in 2012, and in 2014 we followed this with the launch of our ray tracing GPU family, which featured a block dedicated to accelerating ray tracing. It was intended for mobile hardware, but for demonstration and ease of development we also integrated the chip into a PCIe evaluation board, which was up and running by 2016.

What’s all the fuss about?

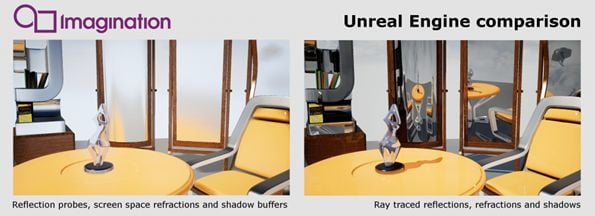

Let’s quickly recap why ray tracing is considered such a big deal. In any 3D graphics scene, the level of realism depends heavily on the lighting. Traditional rendering, known as rasterization, approximates lighting with techniques such as pre-calculated light maps and shadow maps, which are applied to the scene to simulate how it should look. At best, however, this is a poor simulation. Ray tracing is different: it mimics how light behaves in the real world.

In real life, light travels from a source, such as the sun outdoors or a lamp inside a room, onto an object. It then interacts with that object and, depending on the object’s surface properties, bounces onto another surface. The light carries on bouncing around like this, creating light and shade.

In ray tracing on a computer (or, more accurately, path tracing), the process runs in reverse. Rays are cast from the camera viewpoint into the scene, algorithms calculate how each ray interacts with the surfaces it hits, and the path is traced from object to object back to the light source. The result is a scene lit just as the sun would light it in the real world, with realistic reflections and shadows.
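To make the reversed direction concrete, here is a toy sketch in Python. It assumes a hypothetical scene (one sphere resting on a floor, lit by a single point light); every name in it, such as `shade`, `in_shadow` and `LIGHT`, is invented for illustration. It is plain backward ray tracing with one primary ray per pixel and one shadow ray per hit, not full path tracing and not a description of Imagination’s hardware pipeline.

```python
import math

# --- Minimal 3D vector helpers on plain tuples ---
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a):
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)

# --- Hypothetical toy scene: a sphere resting on a floor, one point light ---
SPHERE_CENTER, SPHERE_RADIUS = (0.0, 0.0, -3.0), 1.0
FLOOR_Y = -1.0
LIGHT = (0.0, 5.0, 0.0)
AMBIENT = 0.1
EPS = 1e-4

def hit_sphere(origin, direction):
    """Distance along a unit-length ray to the nearest sphere hit, or None."""
    oc = sub(origin, SPHERE_CENTER)
    b = dot(oc, direction)
    c = dot(oc, oc) - SPHERE_RADIUS ** 2
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > EPS:
            return t
    return None

def hit_floor(origin, direction):
    """Distance to the horizontal plane y = FLOOR_Y, or None."""
    if abs(direction[1]) < 1e-12:
        return None
    t = (FLOOR_Y - origin[1]) / direction[1]
    return t if t > EPS else None

def closest_hit(origin, direction):
    """Return (t, surface normal) for the nearest object hit, or None."""
    hits = []
    t = hit_sphere(origin, direction)
    if t is not None:
        point = add(origin, scale(direction, t))
        hits.append((t, normalize(sub(point, SPHERE_CENTER))))
    t = hit_floor(origin, direction)
    if t is not None:
        hits.append((t, (0.0, 1.0, 0.0)))
    return min(hits) if hits else None

def in_shadow(point, normal):
    """Trace a shadow ray from a surface point back toward the light source."""
    start = add(point, scale(normal, 1e-3))  # nudge off the surface to avoid self-hits
    to_light = sub(LIGHT, start)
    dist = math.sqrt(dot(to_light, to_light))
    blocker = closest_hit(start, normalize(to_light))
    return blocker is not None and blocker[0] < dist

def shade(origin, direction):
    """Brightness in [0, 1] seen along one ray (0.0 means empty background)."""
    hit = closest_hit(origin, direction)
    if hit is None:
        return 0.0
    t, normal = hit
    point = add(origin, scale(direction, t))
    if in_shadow(point, normal):
        return AMBIENT
    to_light = normalize(sub(LIGHT, point))
    return min(1.0, AMBIENT + (1.0 - AMBIENT) * max(0.0, dot(normal, to_light)))

def render(width, height):
    """Cast one primary ray per pixel from a camera at the origin looking down -z."""
    aspect = width / height
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            x = (2.0 * (i + 0.5) / width - 1.0) * aspect
            y = 1.0 - 2.0 * (j + 0.5) / height
            row.append(shade((0.0, 0.0, 0.0), normalize((x, y, -1.0))))
        image.append(row)
    return image

if __name__ == "__main__":
    ramp = " .:-=+*#%@"  # darker to brighter ASCII shades
    for row in render(48, 24):
        print("".join(ramp[min(int(v * len(ramp)), len(ramp) - 1)] for v in row))
```

Each pixel starts at the camera rather than at the light, and the only ray that travels back toward the light is the shadow ray fired from each hit point. A path tracer would additionally keep bouncing rays off every surface and average many random samples per pixel, which is where the heavy computational load described below comes from.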

Traditionally it has not been possible for computing devices to do this in real time because the computational load was too high, so they have relied on rasterization to ‘cheat’. Of course, while we haven’t had it in games, we’re all familiar with how good ray tracing can look. You’ll have seen the results in every 3D animated movie, with fantastic-looking characters and scenes of photo-realistic quality. However, these scenes take months to render on dedicated server farms, which doesn’t work for games, where every frame must be generated in real time at a minimum of 30 frames per second.

As discussed, this was never possible before because of the huge computational cost involved, but Imagination changed the game with a hybrid approach that combines the speed of rasterization with the visual accuracy of ray tracing.

Ready with ray tracing IP

The upshot is that we are very excited that ray tracing is back on the agenda. Ray tracing has been bubbling away under the radar, but once people see it running on PCs they are certainly going to want, and indeed expect, it on their mobile devices, VR headsets and consoles.

About the author:

Benny Har-Even is Technical Communications Specialist at Imagination Technologies – www.imgtec.com

If you enjoyed this article, you will like the following ones: don't miss them by subscribing to :

eeNews on Google News