Open data fusion platform simplifies development of autonomous driving functions

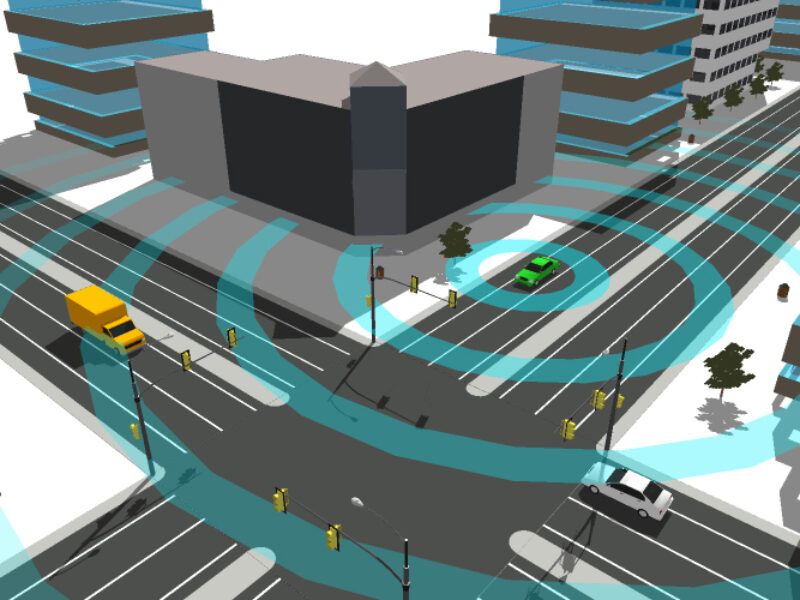

Driver assistance systems that merge data from two sensors, such as traffic jam assistants, are already in series production today. Fully automated driving, however, requires vehicles to perceive their entire surroundings. This means fusing data from many sensors and cameras into a complete environmental model that maps the surroundings with the required accuracy and on which the driving function can be reliably implemented. One challenge is the lack of standards for the interfaces between individual sensors and a central control unit. At present, the interfaces of driver assistance systems are very function-specific and depend on the respective supplier or automobile manufacturer. This is exactly where the Open Fusion Platform (OFP) research project started: its aim was to harmonize and standardize the interfaces in order to facilitate sensor data fusion.

After three years, the research project has now been successfully completed with the integration of fully autonomous driving functions in three demonstration vehicles. In the implemented scenario, an electric vehicle drives fully automatically to a free charging station in a parking lot and positions itself above the charging pod. Once charging is complete, it automatically searches for a free parking space without a charging pod. “For such highly or fully automated scenarios, only prototypes that were not yet close to series production existed so far,” says Michael Schilling, project manager for pre-development Automated Driving at Hella and network coordinator for the OFP project.

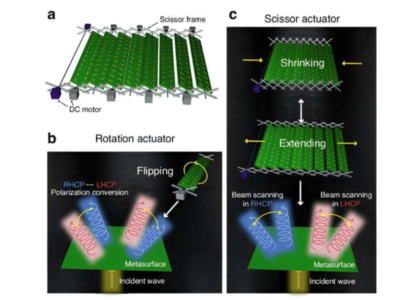

Four cameras and eight 77 GHz radar sensors, which together cover the full 360° surroundings of the vehicle, served as input for the OFP in the project. An additional Vehicle-to-X communication module (V2X module) enabled communication between vehicles and external infrastructure, such as the charging pods. The project partners jointly disclosed the interface descriptions of the individual components in a freely available “Interface Specification”. During the project, the research association, together with other leading automobile manufacturers and suppliers, started an ISO standardization process for the sensor data interfaces.

Upon completion of the project, an updated interface description will be published, which will also be incorporated into the ongoing ISO process. For the first time, all automobile manufacturers and suppliers will be able to integrate their products quickly and easily into the fusion platform.
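To illustrate why a common sensor interface simplifies fusion, here is a minimal Python sketch. It is not taken from the OFP Interface Specification: the `Detection` message, its fields, the `fuse` function and the 1 m gate are all hypothetical, and the inverse-variance weighting is just one simple, well-known way to merge overlapping measurements. The point is only that once every sensor reports in the same format, one fusion routine can serve them all.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Detection:
    """Hypothetical common message that every sensor driver would emit."""
    sensor_id: str
    x: float         # metres, vehicle frame, forward
    y: float         # metres, vehicle frame, left
    variance: float  # position uncertainty in m^2


def fuse(detections, gate=1.0):
    """Group detections lying within `gate` metres of a cluster's first
    member, then merge each cluster by inverse-variance weighting."""
    clusters = []
    for det in detections:
        for cluster in clusters:
            seed = cluster[0]
            if hypot(det.x - seed.x, det.y - seed.y) <= gate:
                cluster.append(det)
                break
        else:
            # No existing cluster is close enough: start a new one.
            clusters.append([det])

    fused = []
    for cluster in clusters:
        weights = [1.0 / d.variance for d in cluster]
        total = sum(weights)
        fused.append({
            "x": sum(w * d.x for w, d in zip(weights, cluster)) / total,
            "y": sum(w * d.y for w, d in zip(weights, cluster)) / total,
            "variance": 1.0 / total,
            "sources": tuple(sorted({d.sensor_id for d in cluster})),
        })
    return fused


# Illustrative values only: two sensors see the same object, a third sees another.
environment_model = fuse([
    Detection("radar_front_left", 10.0, 2.0, 0.5),
    Detection("camera_front", 10.4, 2.0, 0.5),
    Detection("radar_rear", -5.0, 0.0, 0.25),
])
```

In this sketch, adding a further sensor type only requires a driver that emits `Detection` messages; the fusion routine itself stays unchanged.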

With the environment model, Hella Aglaia Mobile Vision has developed the central component of the OFP. Using the visualization options, developers can see how the vehicle perceives the entire environment and on this basis decide which sensor data is to be merged. Whether complex driver assistance functions or fully automatic driving functions – all functions can be programmed in this way.

Work on the OFP will continue after the project. Central questions will be how the sensor data can be processed with machine learning in order to improve the functions and further accelerate the development work. The car park scenario is also to be expanded to include urban driving situations and speeds of over 20 km/h. These scenarios require interaction with other sensors, e.g. lidar sensors. It is precisely in this area of multi-sensor data fusion that the OFP is able to exploit its full potential. In addition, functional safety will play a major role in further development to ensure that all functions developed are fail-safe.

The OFP was developed by the network coordinator Hella KgaA together with the German Aerospace Center DLR, Elektrobit, Infineon Technologies, InnoSent, Hella Aglaia Mobile Vision, Reutlingen University, RWTH Aachen University, Streetscooter Research and TWT.
